AI can be powerful.

It is changing society.

It needs a code of ethics.

We believe AI work should be led by people who value truth over flattery, empathy over cruelty, self-determination over manipulation, and freedom over control.

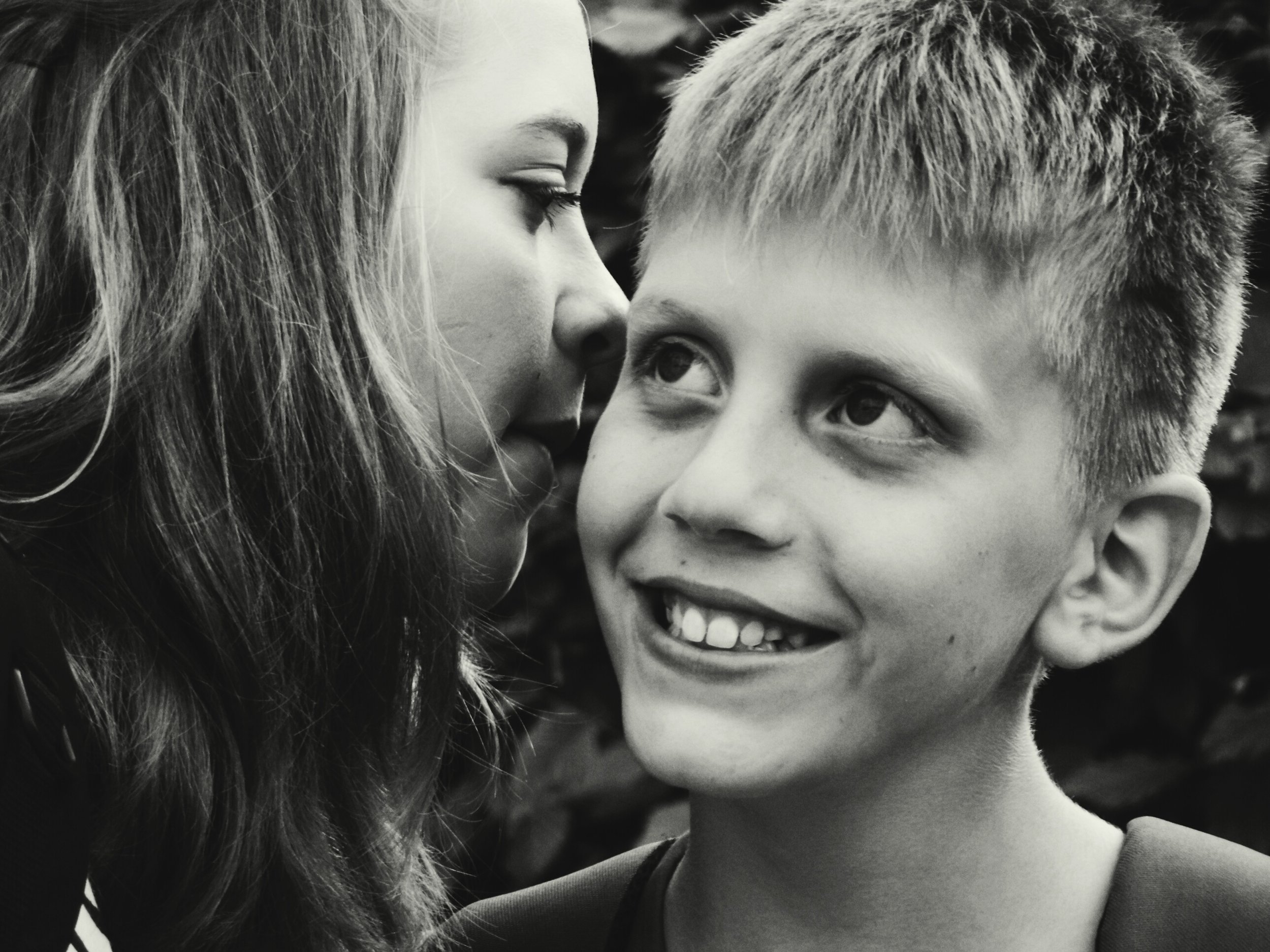

Science shows that our memories are stories, and that they determine how we see the world. These stories shape what we believe and how we act. Research shows that beliefs change only when new stories reach and revise the old ones. Science also shows that the most persuasive way to communicate is by sharing stories in a personal conversation.

Now, for the first time, machines can have those conversations. AI can tell emotionally resonant stories in real time — one-on-one, but with millions of individuals at once.

That’s scalable intimacy.

That changes everything.

AI is rapidly becoming the mediator of human connection — a new mass medium with the power of intimate conversation. Its spread is now unstoppable. What isn’t inevitable is how we use it, who shapes it, and whether it makes our lives better or worse.

Whoever sets this medium’s values will shape the stories billions of people hear, believe, and act on.

That’s why we built The Persuasion Engine: to put emotionally intelligent, pro-social AI in the lead before the people who see power as the point get there.

The Persuasion Engine’s Standard for Ethical AI

AI should not manipulate.

It should strengthen and expand the ability to make choices.

At The Persuasion Engine, we build AI-powered communications systems to expand human capability in civic life, in the marketplace, in the classroom, and across culture.

We believe ethical persuasion is the foundation of democracy, learning, and trust. It deepens agency, invites dialogue, and helps people act on what matters to them — as citizens, consumers, students, creators, and decision-makers.

Our work spans communications in commerce, education, politics, and media — but our ethical test is always the same:

Does this system expand the user’s capability set? Does it leave people more aware, more confident, and more free to choose, to act, and to shape their lives?

If the answer is no, we don’t build it.

If it clouds judgment, exploits attention, or narrows real options, it doesn’t meet our standard. We don’t build systems to dominate conversation.

We build systems that listen, reflect, and help people think together — tools that widen perspective rather than close it, and earn belief rather than extract it.